Leaders still make performance and reward decisions on distorted data. Most companies rely on a single manager’s judgment, but that narrow lens misses too much. One person cannot track every collaboration, every unblocked teammate, or every act of mentorship. And when reviews happen once or twice a year, the feedback is already stale. The result: misallocated rewards, disengagement, and more office politics.

Markets exist to solve this kind of problem. Public markets integrate many perspectives, update them continuously, and fold intangibles into the price alongside hard numbers. Two firms can publish identical financials yet trade at very different valuations because the market sees more than accounting can measure.

Inside organizations, the same logic applies. Every informed perspective adds a micro-signal. When combined and weighted, those signals form a live ticker of contributions that shifts as new information appears.

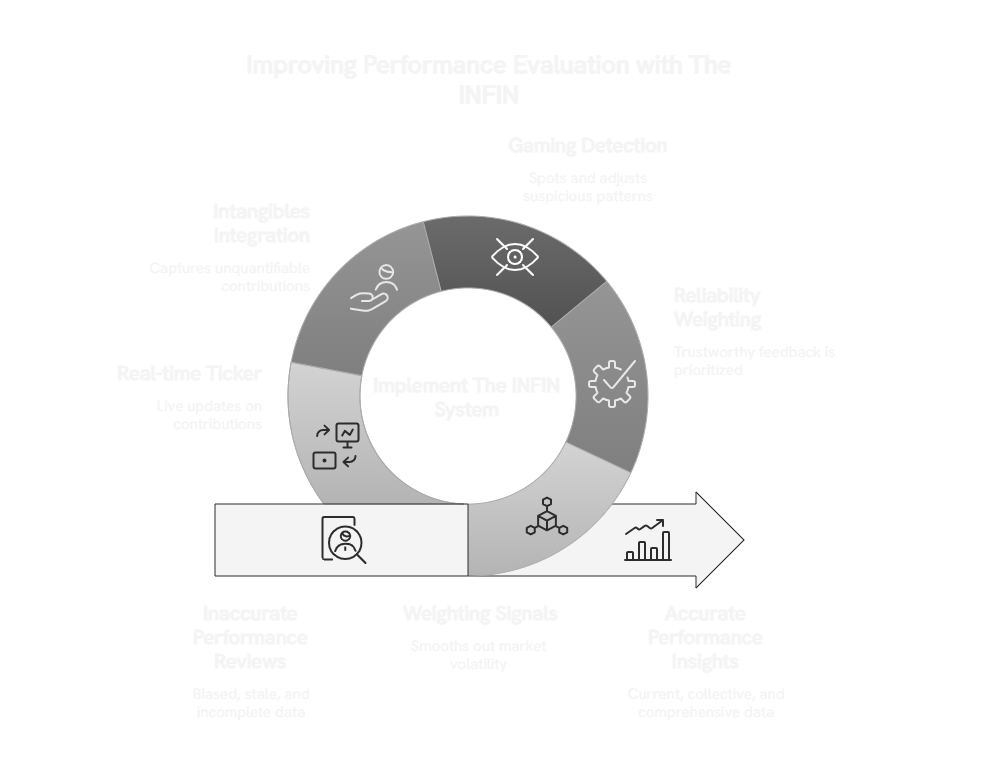

At The INFIN, this is not a metaphor. The platform brings together peer signals, applies credibility-based weighting, and shows a live view of value creation across the company.

The outcome is sharper accuracy, fairer allocation, and faster course correction. For leaders designing performance systems, the message is clear. To stay close to reality, you need a market, not a manager.

A single boss's view is not reality, and research on performance ratings backs this up: ratings often reflect the manager's bias more than the employee's actual work. Two leaders can rate the same person and end up with completely different scores.

The difference doesn't come from the work itself. It comes from where each manager sits, what their needs and interests are, what they notice. That's not a training problem – that's a structural flaw baked into the system.

Think about it: your manager sees maybe 30% of what you actually do. They miss the Slack help at 9 PM. The crisis you quietly defused. The junior developer you've been coaching.

But your teammates? They see it all.

Distributed evaluation systems fix this by pulling in many informed perspectives. Each team member catches a different angle: technical expertise, problem-solving, cross-team unblocking, cultural impact. No one person captures the full picture, but together the group does.

When you combine these views, individual biases tend to cancel out. The signal that emerges is fuller and more accurate than any one manager can provide. Timing matters too: studies show that employees who received meaningful feedback in the past week are 80% more likely to be fully engaged. Collective judgment simply outperforms individual assessment.

Here's the kicker: real-time updates. Distributed systems don't wait for the dreaded yearly review cycle. They update as the work happens. Recognition and course correction arrive when they actually matter.

The data backs this up. Employees who get daily feedback are 3.6 times more likely to stay motivated than those stuck with annual reviews. For leaders, that means faster development, stronger retention, and a workforce that adapts in real time.

Public markets already show how this works. Countless trades combine into a price that reflects the best available collective intelligence. Sure, it looks noisy day-to-day, but over time it tends to converge on underlying value.

Your company works the same way. Many perspectives combine to form a live ticker of contributions. The outcome? Accuracy. A real reflection of reality, not some distorted snapshot through one manager's lens.

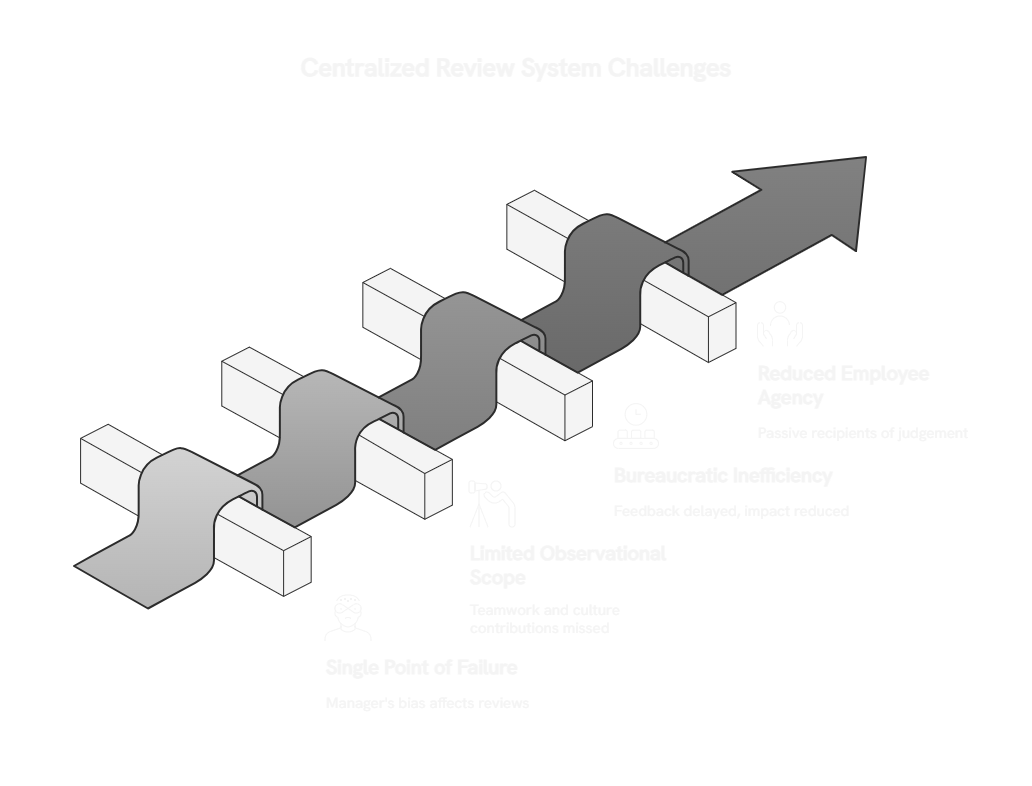

Centralized reviews aren't designed for accuracy – they're designed for control. They hand all the power to one person, usually a manager who's already juggling twelve other priorities. Then we act surprised when the system breaks down.

Here's how it always goes wrong:

Single point of failure. When your manager becomes the sole judge of your performance, their blind spots become everyone's problem. Lacking full information? Tough luck. Showing bias? Too bad. Missing the bigger picture? Well, that's your career on the line. Research backs up what most of us suspect: centralized ratings tell you more about the rater's preferences than the employee's actual performance.

Limited observational scope. No manager sees everything – it's impossible. They catch your direct output if you're lucky. But they miss the teamwork, the cross-team heroics, the cultural work of mentoring and keeping morale up. These contributions actually sustain long-term performance, yet they're completely invisible in a boss-only review.

Bureaucratic inefficiency. The waiting game is brutal. Employees sit around for months waiting for scheduled review cycles, long after the performance moment has passed. By the time feedback arrives, it's archaeology, not coaching. That delay doesn't just weaken the system – it kills its ability to actually shape behavior.

Reduced agency. Here's the worst part: centralized systems turn employees into passive recipients of judgment. You get rated, but you can't really influence it. It's less about learning and more about compliance, which destroys engagement. In practice, this kills ownership and motivation – the exact opposite of what performance systems should achieve.

For leaders, the outcome is painfully predictable. Rewards get misallocated, talent checks out, and politics become more important than actual contributions. A system built for hierarchy ends up destroying accuracy, speed, and fairness.

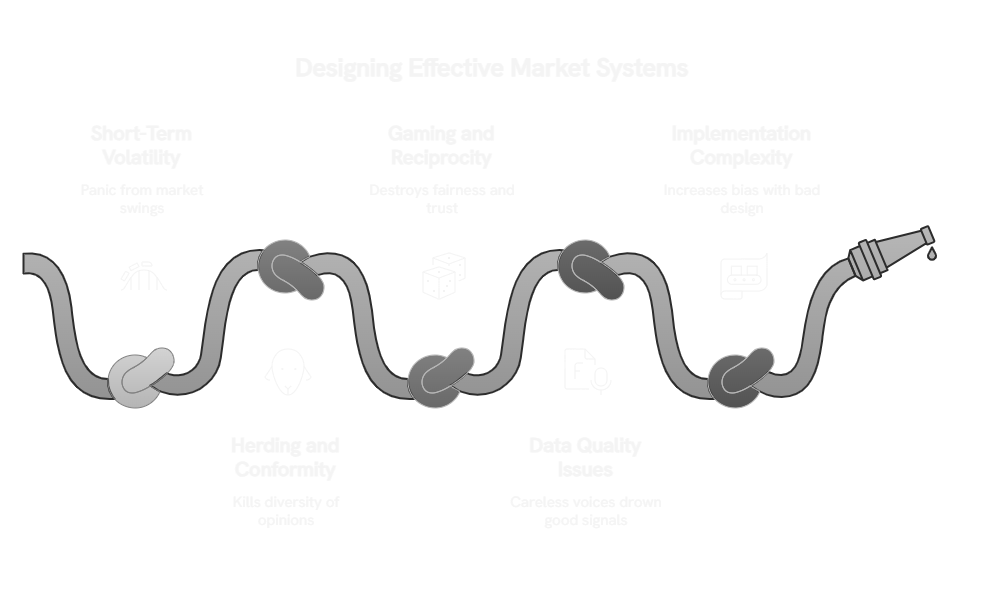

Look, market systems crush centralized reviews, but let's be honest – they're not flawless. The same forces that make them powerful can also mess things up if you're not careful. The difference is you can actually design safeguards that work.

Markets move fast, sometimes too fast. One bad day or heated argument can tank someone's rating, even when their long-term contribution is rock solid. Leaders expecting nice, steady yearly numbers might panic at the swings.

Here's the fix: treat it like a stock index. Focus on trends and averages, not every daily blip. Financial analysts smooth out market noise with moving averages – performance markets should work the same way. The long view reveals the truth, not the moment-to-moment drama.
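To make the moving-average idea concrete, here is a minimal sketch in Python. It is an illustration only, not The INFIN's actual smoothing method: the function name `moving_average`, the 1-to-5 score scale, and the three-day window are all assumptions chosen for the example.

```python
from collections import deque
from typing import Iterable, List


def moving_average(scores: Iterable[float], window: int = 7) -> List[float]:
    """Trailing moving average over daily scores (hypothetical helper).

    Early entries are averaged over however many scores exist so far.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    recent = deque(maxlen=window)  # keeps only the last `window` scores
    smoothed = []
    for score in scores:
        recent.append(score)
        smoothed.append(sum(recent) / len(recent))
    return smoothed


# One bad day (1.0) in an otherwise steady run of 4.0s.
daily = [4.0, 4.0, 4.0, 1.0, 4.0, 4.0]
print(moving_average(daily, window=3))
# [4.0, 4.0, 4.0, 3.0, 3.0, 3.0]
```

The bad day still registers, but as a dip to 3.0 rather than a crash to 1.0. A longer window flattens the blip further, at the cost of reacting more slowly to a real change in performance.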

Here's a real risk: when people see how others rated someone, they might just follow the crowd instead of thinking for themselves. This kills the diversity of opinions that makes collective intelligence actually work. Over time, you get fake consensus that looks real but isn't.

The INFIN sidesteps this by keeping ratings anonymous. You see how you stack up overall, but not who said what. Design choices like blind inputs and delayed visibility protect independence and stop the copycat problem. That friction keeps the system smarter than any single voice.

People will try to game any system – it's inevitable. Friends rate friends highly, enemies settle scores. This tit-for-tat behavior destroys fairness and can create little political cartels inside your performance system.

But you can catch it. Flag the obvious loops where two people keep giving each other perfect scores. When that happens, reduce their influence. In financial markets, manipulation isn't just flagged; it's illegal. The INFIN uses the same logic: structural safeguards preserve trust without requiring everyone to be saints.
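A rough sketch of that loop detection might look like the following. Everything here is assumed for illustration: the `(rater, ratee, score)` tuple layout, the 5.0 top score, the minimum of three ratings in each direction, and the 0.25 penalty. A production system would need something more robust than exact-match pairs.

```python
from collections import defaultdict
from typing import FrozenSet, Iterable, Set, Tuple

Rating = Tuple[str, str, float]  # (rater, ratee, score), assumed layout


def find_reciprocal_pairs(ratings: Iterable[Rating],
                          top_score: float = 5.0,
                          min_ratings: int = 3) -> Set[FrozenSet[str]]:
    """Return pairs who only ever give each other top scores."""
    given = defaultdict(list)
    for rater, ratee, score in ratings:
        given[(rater, ratee)].append(score)

    def always_top(pair: Tuple[str, str]) -> bool:
        scores = given.get(pair, [])
        return len(scores) >= min_ratings and all(s >= top_score for s in scores)

    flagged = set()
    for rater, ratee in list(given):
        if rater == ratee:
            continue  # self-ratings are not a reciprocity loop
        if always_top((rater, ratee)) and always_top((ratee, rater)):
            flagged.add(frozenset((rater, ratee)))
    return flagged


def influence(rater: str, ratee: str,
              flagged: Set[FrozenSet[str]], penalty: float = 0.25) -> float:
    """Weight applied to a rating; flagged pairs count for less."""
    return penalty if frozenset((rater, ratee)) in flagged else 1.0


ratings = (
    [("ann", "bob", 5.0)] * 3 + [("bob", "ann", 5.0)] * 3  # mutual perfect scores
    + [("ann", "cy", 5.0), ("ann", "cy", 4.0), ("cy", "ann", 3.0)]
)
flagged = find_reciprocal_pairs(ratings)
print(influence("ann", "bob", flagged))  # 0.25
print(influence("ann", "cy", flagged))   # 1.0
```

Note that the sketch reduces influence rather than discarding the ratings outright. Two people can legitimately rate each other highly, so a flag is a reason to discount and review, not proof of collusion.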

Not all feedback is created equal. Some people provide thoughtful, evidence-based evaluations. Others rush through or don't have enough context to judge fairly. If every voice counts the same, the careless ones drown out the good signals.

The solution: weight inputs by credibility. Track who consistently provides accurate evaluations and give them proportionately greater say. This turns uneven participation into a feature – credible evaluators gain clout while careless ones fade to background noise.
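As a minimal sketch, credibility weighting can be as simple as a weighted average. The credibility scores below are taken as given; how they are earned (the "track who consistently provides accurate evaluations" part) is the hard problem and is not modeled here. The function name, the 0-to-1 credibility scale, and the 0.5 default for unknown raters are assumptions for the example, not a description of The INFIN's formula.

```python
from typing import Dict


def weighted_signal(ratings: Dict[str, float],
                    credibility: Dict[str, float],
                    default_credibility: float = 0.5) -> float:
    """Credibility-weighted mean of peer ratings (hypothetical helper).

    ratings:     rater -> score they gave
    credibility: rater -> weight between 0 and 1 (assumed scale)
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for rater, score in ratings.items():
        weight = credibility.get(rater, default_credibility)
        weighted_sum += weight * score
        total_weight += weight
    if total_weight == 0:
        raise ValueError("no credible ratings to combine")
    return weighted_sum / total_weight


ratings = {"ann": 4.0, "bob": 4.0, "cy": 1.0}
credibility = {"ann": 0.9, "bob": 0.9, "cy": 0.2}  # cy rushes through reviews

plain_mean = sum(ratings.values()) / len(ratings)
print(plain_mean)                             # 3.0
print(weighted_signal(ratings, credibility))  # roughly 3.7
```

With equal votes, one careless rating drags the score from 4.0 down to 3.0. With weighting, the careless voice still counts, just proportionately less.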

The biggest risk? Bad design. Poor weighting formulas or weak governance can actually increase bias instead of reducing it. A market system without proper guardrails ends up looking arbitrary and unfair – the exact opposite of what you're trying to build.

Leaders must treat governance as priority one, not an afterthought. Clear rules, transparent weighting logic, active monitoring for manipulation – these aren't nice-to-haves, they're essential. When done right, these safeguards turn potential flaws into actual strengths. They keep the system credible and durable.

Traditional reviews obsess over what managers can count – deadlines, deliverables, project metrics. Meanwhile, the most important contributions are happening right under their noses: collaboration, cultural influence, the invisible work that keeps teams functioning. Managers just don't see it.

Take that engineer who unblocks three different teams every week. On paper? Average output. In reality? They're multiplying everyone else's productivity. Or the teammate who mentors juniors and defuses tensions before they explode – they're building resilience across the entire group.

Market systems capture these contributions because peers actually witness them and can reflect that value.

Breakthroughs start as messy experiments that don't map to anyone's OKRs. Managers under pressure to hit their numbers might completely overlook the weird side project that becomes next quarter's game-changer.

But in a distributed system, colleagues who benefit from these ideas can speak up. Creative work finally gets recognized instead of buried.

Financial markets have been pricing intangibles forever. Two companies publish identical financials but trade at completely different valuations – because brand, trust, and momentum matter. Internal markets work exactly the same way.

They reveal the full picture of contribution, not just the measurable slice that fits in a performance review template.

Employees with high emotional intelligence tend to achieve better business results. But boss-only reviews barely capture these skills – if at all. Your colleagues see emotional intelligence in action every single day. Market systems capture what managers miss.

Diverse inputs reveal contributions that metrics alone cannot capture. When you combine all these perspectives, you get a fairer view of the whole person – both the tangible output and the intangible impact that actually drives results.

Guardrails against volatility. Markets move fast – sometimes too fast for good judgment. One production bug or heated meeting can suddenly tank someone's rating, even when they've been crushing it for months.

The INFIN smooths out this noise by weighting signals across time. Leaders see steady performance patterns instead of chasing every spike and dip. Think of it like how smart investors rely on moving averages instead of panicking over daily swings.

Weighting by credibility. Here's the reality: not all feedback is worth the same. Some team members give thoughtful, evidence-based evaluations. Others rush through their inputs or rate inconsistently because they're distracted or don't really know the person's work. The system learns who to trust more.

Detection of gaming and reciprocity. People will try to game this – count on it. Friends inflating each other's scores, rivals settling grudges through ratings. Picture two colleagues who mysteriously always give each other perfect marks, regardless of actual performance. The system spots these patterns and adjusts accordingly.

Integration of intangibles. Traditional scorecards are obsessed with outputs you can count. But real value shows up everywhere else: the unblockers, the mentors, the quiet innovators. Take that engineer who helps three teammates solve critical issues every sprint – their contribution multiplies through everyone else's productivity. A boss-only review would completely miss this multiplication effect.

The real-time ticker. This is where it gets interesting. The system pulls all these signals into a live "ticker" of contributions that updates as new information flows in. For leaders, this means performance data that's actually current, built on collective intelligence instead of stale annual reviews.

For employees, it means recognition and accountability happen immediately, not nine months later when everyone's forgotten what actually happened.
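One simple way to picture a ticker that updates as signals arrive is an exponentially weighted running average, where each new signal nudges the current value instead of replacing it. This is a sketch under assumptions: the class name `ContributionTicker` and the smoothing factor `alpha` are invented for the example, and the source does not say which update rule The INFIN actually uses.

```python
from typing import Optional


class ContributionTicker:
    """Running contribution score updated one signal at a time (illustrative)."""

    def __init__(self, alpha: float = 0.2) -> None:
        # alpha near 1 reacts quickly to new signals; near 0 changes slowly.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value: Optional[float] = None

    def update(self, signal: float) -> float:
        """Fold a new signal into the ticker and return the new value."""
        if self.value is None:
            self.value = signal  # first signal sets the starting point
        else:
            self.value = self.alpha * signal + (1.0 - self.alpha) * self.value
        return self.value


ticker = ContributionTicker(alpha=0.5)
for signal in [4.0, 2.0, 3.0]:
    print(ticker.update(signal))
# 4.0
# 3.0
# 3.0
```

Because only the current value is stored, the ticker is always up to date without recomputing history, and older signals fade gradually rather than dropping off a cliff.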

You don't need to blow up your entire performance system on day one. That's a recipe for disaster and pushback. Instead, run a small pilot – add peer inputs alongside existing reviews in one team or department.

This gives you proof points and surfaces the inevitable early lessons without freaking out the rest of the organization. Plus, pilots generate your first real data set, which helps you fine-tune weighting and guardrails before you scale.

Trust is everything here. People will resist if they think the system can be gamed or if they have no clue how their input gets used. Be brutally transparent about the rules. Show them exactly how you catch manipulation.

Walk them through how raw data becomes actionable signals. When people understand the mechanics, skepticism turns into buy-in. Mystery breeds resistance.

Once your pilot proves stable, start expanding across more teams. Each new group adds different perspectives, making the data more accurate and manipulation much harder.

Phased rollouts let you handle the messy practical stuff early – figuring out cross-team dynamics, integrating with existing HR processes, all that operational complexity. Then you can confidently roll it out company-wide.

Here's where it gets real: a system only matters when it actually affects outcomes. At The INFIN, these signals directly influence pay and promotion decisions. They guide bonus distribution and recognition.

Start with light influence on rewards, then gradually move toward full integration. This sends a clear message – politics are out, contribution is in.
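One way to think about "light influence first" is as a blend between the existing manager rating and the market signal, with the market's share raised phase by phase. The sketch below is purely illustrative: the function name, the shared score scale, and the rollout weights are hypothetical, and where the weight ends up is a governance decision, not something this example prescribes.

```python
def blended_score(manager_score: float,
                  market_signal: float,
                  market_weight: float) -> float:
    """Mix a manager rating with a market signal (both on the same scale)."""
    if not 0.0 <= market_weight <= 1.0:
        raise ValueError("market_weight must be between 0 and 1")
    return (1.0 - market_weight) * manager_score + market_weight * market_signal


# Hypothetical rollout: the market's share of the reward input grows each phase.
ROLLOUT_WEIGHTS = [0.0, 0.5, 1.0]

for weight in ROLLOUT_WEIGHTS:
    print(weight, blended_score(manager_score=3.0, market_signal=5.0,
                                market_weight=weight))
# 0.0 3.0
# 0.5 4.0
# 1.0 5.0
```

Publishing the weight for each phase in advance also supports the transparency point: people can see exactly how much the new signal counts before it touches their pay.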

Markets evolve, and so do the people gaming them. Without ongoing governance, even the best system will drift toward bias or exploitation. Audit signals regularly, refine weighting algorithms, update safeguards.

Treat governance like a living function, not a one-time setup. That's what keeps the system trustworthy over time.

Rolling out a market system isn't about flipping a switch – it's about building credibility one step at a time. The goal is making fairness and visibility part of your company's DNA, not just another HR initiative.

"Won't this turn into a popularity contest?" This is always the first objection, and it misses the point entirely. Market systems aren't popularity contests because they include weighting and anti-gaming safeguards.

The system learns who gives credible, balanced feedback over time – those voices matter more than the noise. Someone trying to inflate their friends or torpedo their rivals? The patterns get detected and neutralized quickly.

"Isn't this just more bureaucracy?" Actually, it's the opposite. The INFIN automates signal collection and weighting, which cuts the admin load dramatically. No more managers spending weekends writing performance reviews.

Signals get gathered in the normal flow of work – people are already collaborating and observing each other. What looks like added complexity is actually way less overhead than traditional review cycles.

"What about sensitive feedback?" The beauty is in the aggregation. Feedback gets anonymized and combined so no single voice is exposed. The system shows contributions as collective signals, not personal attacks.

This kills the defensiveness that makes feedback sessions so painful, while still surfacing real issues that need attention.

"Will employees trust it?" Trust comes from transparency, not secrecy. Show people exactly how signals get weighted, how gaming gets caught, and how their input connects to actual outcomes.

When the mechanics are clear, confidence builds. Plus, pilots prove the system works fairly before you roll it out widely – actions speak louder than promises.

"How do we know it works?" Markets already prove this works in much higher-stakes environments. Public markets integrate information better than any individual analyst ever could.

The same principle applies here – collective intelligence beats individual judgment. Early pilots demonstrate the accuracy and fairness gains, and scaling just reinforces both benefits.

Centralized reviews don't just blur reality – they actively distort it. They reward whatever catches a manager's eye, not what actually drives team success. Market systems flip this by making contributions visible from every angle.

They turn scattered signals into a complete picture of value that's much harder to game, harder to politicize, and actually reflects what's happening.

But this isn't just about better data – it's about fundamentally changing your culture. When recognition follows contribution in real time, accountability stops being this abstract concept and becomes just how things work.

Trust builds naturally. You end up with a healthier performance culture where people actually know their efforts matter, leaders can act on current information, and value flows to the people creating it.

Here's what you're really getting:

Leaders who adopt market-based performance systems aren't just swapping out tools. They're fundamentally redrawing the connection between effort, value, and reward. That kind of clarity doesn't just improve performance – it builds organizations that can actually endure.

The question isn't whether this works. Markets have been proving collective intelligence beats individual judgment for centuries. The question is whether you'll lead this shift or watch your competitors gain the advantage while you're still stuck with annual reviews.